Calling the DeepSeek model with Spring AI + Ollama

Project setup

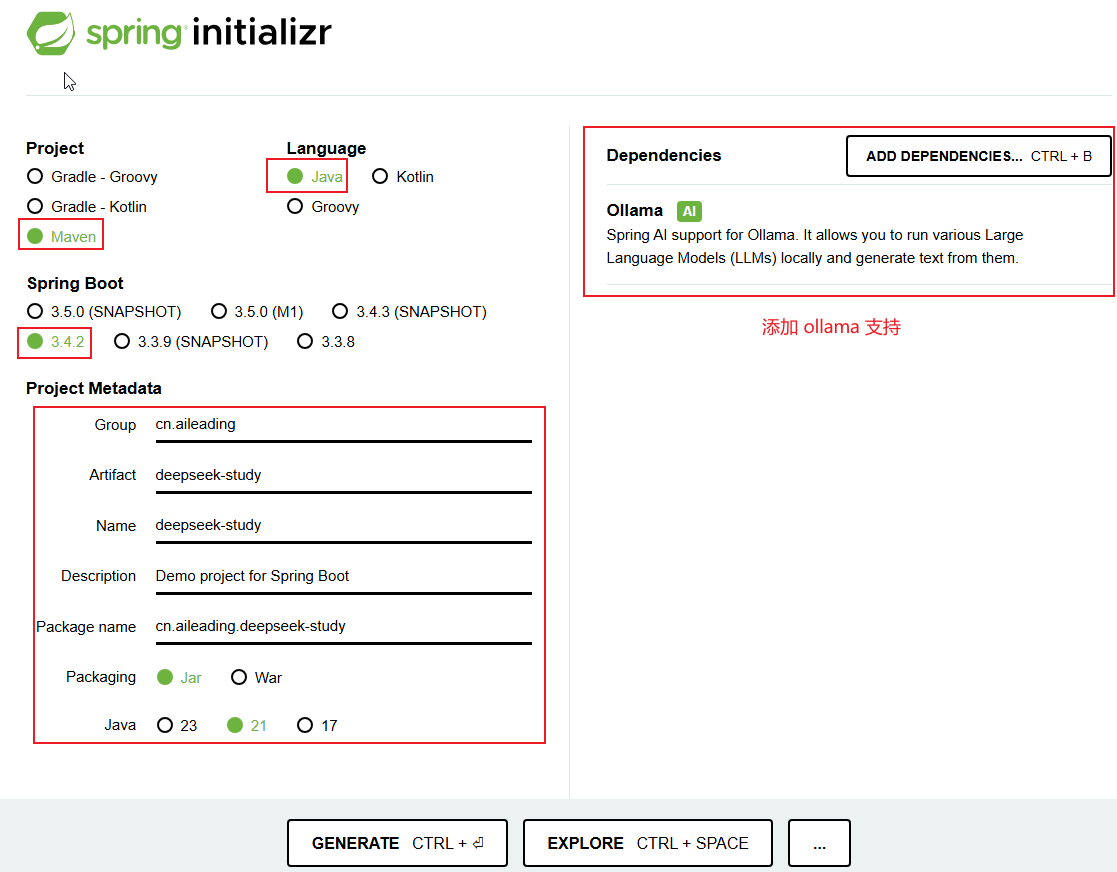

在 SpringBoot 脚手架页面 按照下图所示进行参数选择,之后点击“GENERATE”生成代码包。

Unzip the archive and import the project into IntelliJ IDEA.

Writing the code

Official spring-ai-ollama documentation: https://docs.spring.io/spring-ai/reference/api/chat/ollama-chat.html

Root pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.4.2</version>
        <relativePath/> <!-- look up parent from repository -->
    </parent>

    <groupId>cn.aileading</groupId>
    <artifactId>deepseek-study</artifactId>
    <version>0.0.1-SNAPSHOT</version>

    <properties>
        <java.version>21</java.version>
        <spring-ai.version>1.0.0-M5</spring-ai.version>
    </properties>

    <!-- Spring AI milestone releases are published to the Spring milestone repository -->
    <repositories>
        <repository>
            <id>spring-milestones</id>
            <name>Spring Milestones</name>
            <url>https://repo.spring.io/milestone</url>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
        </repository>
    </repositories>

    <dependencyManagement>
        <dependencies>
            <!-- Import the Spring AI BOM so all Spring AI artifacts share one version -->
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>${spring-ai.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <!-- Spring AI Ollama starter: auto-configures OllamaChatModel -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
        </dependency>
        <!-- Spring MVC, for the REST controller -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

application.properties configuration file

```properties
spring.application.name=deepseek-study
spring.ai.ollama.base-url=http://127.0.0.1:11434
spring.ai.ollama.chat.options.model=deepseek-r1:32b
```

Notes: only the two core settings are listed below. See the spring-ai-ollama documentation for the full list of options.

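- spring.ai.ollama.base-url: the base URL of the Ollama service. 11434 is Ollama's default port.

- spring.ai.ollama.chat.options.model: the Ollama model used for chat, here deepseek-r1:32b. The model must already be available in the local Ollama instance.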
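Before starting the Spring application, you may want to confirm two things: that the Ollama server at the configured base URL is reachable, and that it already has the configured model. The sketch below is a hypothetical helper and is not part of the original project.

- It uses only the JDK's java.net.http client.
- It calls Ollama's `GET /api/tags` endpoint, which lists the locally available models.
- It checks for the model name with a naive text search instead of real JSON parsing.
- The two values in `main` are assumed to match the application.properties above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical preflight check: is the configured model present on the Ollama server?
class OllamaPreflight {

    // Resolves Ollama's "list local models" endpoint against the base URL.
    static URI tagsUri(String baseUrl) {
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(base + "/api/tags");
    }

    // Naive check: looks for "name":"<model>" in the JSON text (spaces stripped) instead of parsing it.
    static boolean listsModel(String tagsJson, String model) {
        return tagsJson.replace(" ", "").contains("\"name\":\"" + model + "\"");
    }

    public static void main(String[] args) throws InterruptedException {
        String baseUrl = "http://127.0.0.1:11434"; // assumed equal to spring.ai.ollama.base-url
        String model = "deepseek-r1:32b";           // assumed equal to spring.ai.ollama.chat.options.model

        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(tagsUri(baseUrl)).GET().build();

        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            if (response.statusCode() != 200) {
                System.out.println("Ollama answered HTTP " + response.statusCode());
            } else if (listsModel(response.body(), model)) {
                System.out.println("OK: " + model + " is available");
            } else {
                System.out.println("Model not found; try: ollama pull " + model);
            }
        } catch (IOException e) {
            // Typically a ConnectException when the Ollama service is not running.
            System.out.println("Cannot reach Ollama at " + baseUrl + ": " + e);
        }
    }
}
```

With JDK 11 or later, the file can be run directly with `java OllamaPreflight.java`. If the model is missing, `ollama pull deepseek-r1:32b` downloads it.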

Controller:

```java
package cn.aileading.deepseek_study.web;

import java.util.Map;

import org.springframework.ai.ollama.OllamaChatModel;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class Controller {

    // Bean auto-configured by spring-ai-ollama-spring-boot-starter from the spring.ai.ollama.* properties
    private final OllamaChatModel chatModel;

    // Constructor injection: with a single constructor, Spring supplies the bean automatically
    public Controller(OllamaChatModel chatModel) {
        this.chatModel = chatModel;
    }

    // GET /ai/generate?message=... sends the message to the model and returns {"generation": "<reply>"}
    @GetMapping("/ai/generate")
    public Map<String, String> generate(
            @RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        return Map.of("generation", this.chatModel.call(message));
    }
}
```

Application entry point:

```java
package cn.aileading.deepseek_study;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

// Component scanning starts from this package, so the controller in the .web subpackage is picked up
@SpringBootApplication
public class DeepseekStudyApplication {

    public static void main(String[] args) {
        SpringApplication.run(DeepseekStudyApplication.class, args);
    }
}
```

Running the application

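Make sure the Ollama service is running, then start the application. Open http://127.0.0.1:8080/ai/generate?message=0.8和0.11谁大 in a browser and check the output. The message asks "Which is larger, 0.8 or 0.11?"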
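The browser percent-encodes the Chinese characters in that URL automatically. When you call the endpoint from code, you have to encode the message yourself. Below is a hypothetical stand-alone client that does this using only the JDK; the class name GenerateClient and its methods are illustrative. It builds the encoded URL for the controller's /ai/generate endpoint and prints the raw JSON response. It assumes the application listens on 127.0.0.1:8080.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

// Hypothetical client for GET /ai/generate?message=...
class GenerateClient {

    // Builds <appBaseUrl>/ai/generate?message=<UTF-8 percent-encoded message>.
    static URI generateUri(String appBaseUrl, String message) {
        // URLEncoder applies form encoding (space -> '+', a literal '+' -> "%2B"),
        // so every remaining '+' is a space; "%20" is the unambiguous form in a URI query.
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create(appBaseUrl + "/ai/generate?message=" + encoded);
    }

    public static void main(String[] args) throws Exception {
        String message = args.length > 0 ? args[0] : "0.8和0.11谁大";

        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(generateUri("http://127.0.0.1:8080", message))
                // Assumed timeout: a local 32B reasoning model may take minutes to answer.
                .timeout(Duration.ofMinutes(5))
                .GET()
                .build();

        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        // The controller returns a JSON object of the form {"generation": "..."}.
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Usage: run `java GenerateClient.java "0.8和0.11谁大"`. The correct answer the model should reach is that 0.8 (that is, 0.80) is larger than 0.11.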
